With the project nearly done, I was given the job of putting together the end credits. Initially, some members of the group suggested that I just do a "Created by..." credit and leave it at that, but I thought it would be good to list each of our roles and contributions to the project. I started by typing out the credits in TextEdit, confirming with the rest of my group who did which job.

In Adobe Premiere, I began copying the credits into title stills, which we had decided would be set in Helvetica.

When we were filming the green screen sequence, we shot quite a lot of footage of ourselves just mucking around, so we decided that this would look good alongside the credits. I went through this footage in Premiere, selected a few clips to run alongside the credits and then exported them to another sequence.

Next, I imported this video into the credits sequence, scaled it down and placed it right next to the credits. I made the credits run slightly longer so that the video would fit around them, and keyframed the video so that it fades out towards the end of the credits. This is a very basic example of compositing, but it got our credits done. Here is the outcome.

Sunday, 27 March 2011

Saturday, 26 March 2011

Compositing the creature: Shot 19

The second shot that I was required to composite was the 19th shot of the piece. This largely involved applying shadows to the scene, so that the creature would blend in with the shaded areas of the location. Here is the original live action footage.

To integrate the creature into this footage, I opened the 3D blockout, which Matt had lit and in which Will had animated the creature.

The idea was to render the creature's run separately from the shadows. To do this, I selected the Lambert-shaded surfaces that made up the wall and floor and assigned them to a separate render layer.

This layer was called layer1. I turned this layer off and rendered only the masterLayer, which contained the creature. As we had agreed as a group, I rendered the creature's run in mental ray. Here is a still from the batch render.

Next, I turned off the masterLayer and turned on layer1. I assigned a Use Background material to the surfaces. This meant that they would not be visible themselves but would still pick up the shadows of the creature and the wall. I rendered this using Maya Software, because I could not get mental ray to render these shadows out properly. Here is a shot of the rendered shadows.

The next stage was to import both renders into After Effects and place them over the live action footage.
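Layering a shadow pass over a plate usually amounts to darkening the plate wherever the shadow matte is set. The sketch below is a minimal illustration in plain Python, not how After Effects implements it. It assumes that the shadow coverage has already been extracted as a value from 0 to 1 per pixel. `shadow_density` is a hypothetical stand-in for the shadow layer's opacity.

```python
def apply_shadow(plate, shadow_matte, shadow_density=0.6):
    """Darken a live-action plate wherever the shadow matte is set.

    plate and shadow_matte are same-sized 2D lists of floats in [0, 1].
    A matte value of 1 means full shadow; shadow_density (a hypothetical
    parameter, not a Maya or After Effects setting) controls how dark a
    fully shadowed pixel becomes.
    """
    result = []
    for plate_row, matte_row in zip(plate, shadow_matte):
        result.append([
            # Multiply: unshadowed pixels (matte 0) are unchanged,
            # shadowed pixels are scaled down towards black.
            p * (1.0 - shadow_density * m)
            for p, m in zip(plate_row, matte_row)
        ])
    return result


plate = [[0.8, 0.8], [0.5, 0.5]]
matte = [[0.0, 1.0], [0.5, 0.0]]
shadowed = apply_shadow(plate, matte)
```

A multiply-style darken is used here rather than painting the shadow a flat grey, so the texture of the ground in the footage still shows through the shadow.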

I scaled down both rendered sequences, the creature and the shadows, so that the shadow lined up with the building on the right and the creature could run through the scene. I made sure the shadow layer and the creature layer were positioned and scaled identically.

The renders were initially too small relative to the live action, so the creature simply popped into view rather than appearing to run into shot. To fix this, I scaled up the live action footage and the CG footage together, so that the creature appeared to run into frame and into the shade of the council estate.

Next, I needed to fix the shadows. They were initially too solid and hard-edged to pass as shadows, so I applied a Gaussian Blur to make them look more natural. Finally, I discovered that the creature's shadow was coming out white. To fix this, I applied a Set Channels effect and set all of its channels to Alpha, which turned the shadow a darker grey.
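Softening a hard shadow edge with a Gaussian Blur can be sketched as a separable blur. Each row is blurred with a normalised 1D Gaussian kernel, and then each column is blurred in the same way. This is a simplified illustration on small lists of matte values from 0 to 1, and the function names are hypothetical. After Effects' own Gaussian Blur exposes a Blurriness setting rather than a sigma.

```python
import math


def gaussian_kernel(sigma, radius=None):
    """Return normalised 1D Gaussian weights for the given sigma."""
    if radius is None:
        radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row(values, sigma):
    """Blur one row of matte values, clamping samples at the edges."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            # Clamp sample positions so edge pixels reuse the border value.
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * values[j]
        out.append(acc)
    return out


def blur_matte(matte, sigma):
    """Separable 2D Gaussian blur: blur every row, then every column."""
    rows = [blur_row(row, sigma) for row in matte]
    cols = [blur_row(list(col), sigma) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


# A hard-edged shadow matte: unshadowed on the left, full shadow on the right.
hard_edge = [[0.0, 0.0, 1.0, 1.0]] * 3
soft_edge = blur_matte(hard_edge, sigma=1.0)
```

After the blur, the step from 0 to 1 becomes a gradual ramp across the edge, which is what makes the shadow read as soft rather than cut out.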

Here is the final, fully composited shot of the chase.

Compositing the creature: Shot 6

In the post production process, we needed to composite the creature into each shot. This also involved the use of lighting and shadow to blend the creature in with the rest of the scene. We were each given some shots to composite, based on the blockouts of the scene and the lighting that had been set up by Matt.

The shots I was given were shot 6, in which the creature emerges from the manhole, and shot 19, in which the creature chases Richard around a corner.

The first one I will talk through is shot 6. Here is the original video.

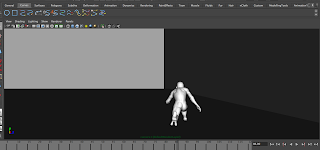

And here is a screenshot of the animation in Maya that I had to integrate into this scene.

I rendered the Maya animation out in mental ray and then imported it into After Effects along with the live action footage.

The challenge with this shot was making it look as if the creature was emerging from a hole, rather than from off camera, so I needed to create a hole for it to emerge from. To do this, I simply drew a mask on the live action footage over the area of the manhole.

I then feathered the mask so that the edge of the hole wasn't too sharp. At this point, the hole just looked black and had no depth. To fix this, I looked for an image of the inside of a manhole to put beneath the masked layer, so that the hole looked as though it led down into a sewer. I came across this image.

I placed this image beneath the live action footage so that it showed through the masked area. I then applied a colour correction so that the image fitted in with the scene. Here is how it looked.
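The masked hole works like an alpha "over" composite. The live action layer is opaque everywhere except inside the mask, and the feather turns the hard edge into a ramp so that the sewer image underneath blends in. This is a minimal sketch, assuming a circular mask and a linear feather ramp. After Effects masks can be any shape, and their feather falloff is not necessarily linear. All names are hypothetical.

```python
import math


def feathered_hole_alpha(x, y, cx, cy, radius, feather):
    """Opacity of the live-action layer at pixel (x, y).

    0 inside the hole, 1 outside, with a linear ramp `feather` pixels
    wide centred on the mask edge at distance `radius` from (cx, cy).
    """
    distance = math.hypot(x - cx, y - cy)
    if feather <= 0:
        return 0.0 if distance < radius else 1.0
    # Ramp from 0 at (radius - feather/2) up to 1 at (radius + feather/2).
    t = (distance - (radius - feather / 2)) / feather
    return min(max(t, 0.0), 1.0)


def over(fg, bg, alpha):
    """Standard 'over' blend of a foreground value onto a background value."""
    return fg * alpha + bg * (1.0 - alpha)


def composite_hole(plate, underlay, cx, cy, radius, feather):
    """Reveal `underlay` (e.g. the sewer image) through a feathered hole in `plate`."""
    out = []
    for y, (plate_row, under_row) in enumerate(zip(plate, underlay)):
        out.append([
            over(p, u, feathered_hole_alpha(x, y, cx, cy, radius, feather))
            for x, (p, u) in enumerate(zip(plate_row, under_row))
        ])
    return out


street = [[1.0] * 9 for _ in range(9)]   # bright road surface
sewer = [[0.0] * 9 for _ in range(9)]    # dark sewer image
result = composite_hole(street, sewer, cx=4, cy=4, radius=2, feather=2)
```

Pixels in the middle of the hole come entirely from the sewer image and pixels well outside it come from the street. Pixels on the feathered edge are a mix of the two.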

Here is the final composited shot.

Thursday, 24 March 2011

Some action figure close-ups

Towards the end of the sequence, the parkour runner and the creature morph into action figures and, in a surprise twist, the whole scenario turns out to be a product of our tutor Jared's imagination. It was my job to key out the green screen in the close-up shots of both action figures and apply a rendered CG background.

Here is each of the clips. Each has also had its brightness adjusted so that it blends in with the environment.

This last shot had a lot of noise in it, so I applied a mask, which I then keyframed, to remove some of it. Even then, some noise remained in areas too close to the action figure to key out cleanly. I have found that noise tends to show up significantly more on green screen than on blue screen.

The shaking manhole

Along with the green screen shots, I was given some shots to render and then composite. The first was the close-up shot of the manhole, which suddenly begins to shake. Not only did I have to render this manhole and composite it with the live action, but I also had to animate the shaking.

My team members said that the manhole needed to shake with a subtle but menacing movement. Here is what I came up with.

Next, I rendered this in mental ray and then masked out the Lambert-shaded area around the manhole.

The problem with this shot was that the dark shades on the manhole did not match the brightness of the surrounding ground. I fixed this by applying a Curves filter to the live action footage, which let me bring its brightness down.

As a result, the footage looked much darker overall, which suited the mood we were trying to convey in this shot.
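A Curves adjustment remaps every pixel value through a tone curve defined by control points. Pulling the midpoint down, as sketched here, darkens the mid-tones while leaving pure black and white in place. For simplicity, this sketch joins the points with straight lines, whereas the Curves filter in After Effects draws a smooth curve. The control-point values are illustrative only.

```python
from bisect import bisect_right


def make_curve(points):
    """Build a tone curve from (input, output) control points in [0, 1].

    Linear interpolation between points is a simplification of the
    smooth curve a real Curves filter fits through its points.
    """
    points = sorted(points)
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]

    def curve(value):
        if value <= xs[0]:
            return ys[0]
        if value >= xs[-1]:
            return ys[-1]
        # Find the segment containing `value` and interpolate along it.
        i = bisect_right(xs, value) - 1
        t = (value - xs[i]) / (xs[i + 1] - xs[i])
        return ys[i] + t * (ys[i + 1] - ys[i])

    return curve


# Pulling the midpoint down darkens the mid-tones; black and white stay put.
darken = make_curve([(0.0, 0.0), (0.5, 0.35), (1.0, 1.0)])
frame = [[0.2, 0.5, 0.9]]
darker = [[darken(v) for v in row] for row in frame]
```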

Thursday, 17 March 2011

Richard drops

Another shot that I was given to composite was a shot of Richard making a huge jump down a flight of stairs. Producing this composite involved layering a green screen shot of Richard jumping off a chair with a CG shot of the staircase and the floor he eventually lands on.

Here is the green screen footage and a rendered close up of the staircase.

There is some very noticeable camera movement in the video, particularly when he lands, so I decided to motion track it in Match Mover. Here is a shot of the sequence in Match Mover.

Match Mover seemed to track the camera movement fairly accurately. However, when I exported the tracking data to a camera in Maya, I found that the camera just remained static. It seemed the camera movements were too slight to carry over to the Maya camera. Instead, I went straight into After Effects and composited the live action and CGI together. I also drew masks around the tape markers on the green screen to hide them.

Motion tracking Richard's run

The next shot I will talk about shows our runner, Richard, running away before being cornered against a graffiti wall. To make this shot believable, I used motion tracking, as well as masking and green screen. Here is the original footage that we recorded in one of the studios at uni.

The green screen is where I will place the CG environment. We placed pieces of sellotape on the green screen, and we will use these to track the movement and speed of the camera once the green screen is keyed out. They will also be used by Match Mover, a program that tracks and reconstructs the camera movement so that I can export it to a camera in Maya. This means the cameras in the live action and the CGI will move in sync with each other.

The next stage was to load the sequence into Match Mover. In this program, I applied Automatic Tracking, which tracks features across the frames of the shot to work out the camera movement. After the tracking was applied, I used the clean assistant to reduce the number of tracks, so that the camera movement would not be too erratic. Here is how the scene looks in Match Mover after an Automatic Track.

The green lines in this shot show the movements that have been tracked. The Automatic Track captured not only the camera movement but also Richard's movements. At first, I didn't think this was a problem. However, when I exported the camera movements into Maya, I found that the camera moved really erratically and even moved out of the scene. I could not work out why the camera was moving like this.

I showed this problem to my tutor, and he advised me to delete all of the trackers that were near or on Richard's figure. His reasoning was that the solved camera was following Richard's movements as well as the actual movement of the camera. I went through the whole sequence in Match Mover and deleted every tracker that appeared on Richard. Here is the same shot after I had gone through the sequence.
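Removing the trackers on Richard amounts to discarding any 2D track that ever lands on the moving actor, so that the camera solve only sees static scenery. The sketch below assumes a hypothetical data layout. Each track is a list of (frame, x, y) samples, and there is a per-frame bounding box around the actor. It does not use Match Mover's own file format or interface.

```python
from typing import Dict, List, Tuple

# Hypothetical data layout: each 2D track is a list of (frame, x, y) samples.
Track = List[Tuple[int, float, float]]
# Hypothetical per-frame box around the actor: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def touches_actor(track: Track, actor_boxes: Dict[int, Box], margin: float = 0.0) -> bool:
    """Return True if the track ever lands on (or within `margin` pixels of) the actor."""
    for frame, x, y in track:
        box = actor_boxes.get(frame)
        if box is None:
            continue
        x_min, y_min, x_max, y_max = box
        if x_min - margin <= x <= x_max + margin and y_min - margin <= y <= y_max + margin:
            return True
    return False


def remove_actor_tracks(tracks: Dict[str, Track], actor_boxes: Dict[int, Box],
                        margin: float = 10.0) -> Dict[str, Track]:
    """Keep only tracks on static scenery, so the camera solve ignores the actor."""
    return {name: track for name, track in tracks.items()
            if not touches_actor(track, actor_boxes, margin)}


tracks = {
    "tape_marker": [(1, 50.0, 40.0), (2, 52.0, 41.0)],
    "on_richard": [(1, 200.0, 150.0), (2, 210.0, 152.0)],
}
richard = {1: (180.0, 100.0, 240.0, 300.0), 2: (190.0, 100.0, 250.0, 300.0)}
clean = remove_actor_tracks(tracks, richard)
```

Any track that touches Richard, even on a single frame, is discarded. This is because a tracker that drifts onto a moving figure feeds the figure's motion into the camera solve.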

I exported these camera movements to Maya and applied them to the relevant scene. There, I made a few manual adjustments, such as deleting some unnecessary keyframes and smoothing the movement in the Graph Editor. Here is an unrendered Playblast of the camera movement in the chase scene.
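Smoothing a jittery camera channel can be illustrated with a centred moving average over the per-frame values. This is a simple stand-in for adjusting curves by hand in the Graph Editor, not a Maya feature. The window size and sample values are hypothetical.

```python
def smooth_keys(values, window=2):
    """Centred moving average over a list of per-frame channel values.

    `window` is the number of neighbouring frames averaged on each side.
    The first and last values are kept so the move starts and ends in place.
    """
    if len(values) < 3:
        return list(values)
    out = [values[0]]
    for i in range(1, len(values) - 1):
        lo = max(0, i - window)
        hi = min(len(values), i + window + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    out.append(values[-1])
    return out


# A jittery camera translateX channel; the spike at frame 3 is a tracking glitch.
translate_x = [0.0, 0.1, 0.2, 0.9, 0.4, 0.5, 0.6]
smoothed = smooth_keys(translate_x)
```

Averaging spreads the glitch across neighbouring frames instead of letting the camera jump. The trade-off is that genuine fast moves get softened too, which is one reason hand-editing in the Graph Editor can be preferable.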

I now needed to composite this with Richard's running sequence. The aim was for Richard's run to move in sync with the camera movements from Maya. In After Effects, I imported both the fully rendered camera sequence from Maya and the green screen footage of Richard running. I placed the live action footage over the rendered CG sequence and then keyed out the green screen with a Keylight filter.

The white walls around the green screen were still visible. I didn't key out the white, because keying out any colour other than green or blue would have caused problems. It would have started to key out parts of Richard, such as his skin tone and clothing. To get rid of the white walls, I placed masks around them, which I then keyframed to follow them as they moved.

To make Richard look as though he is running towards the wall, I keyframed the position and scale of the video footage, creating the illusion of Richard running away from the camera. As a finishing touch, I applied a Brightness and Contrast filter. I keyframed it to make Richard's figure grow darker as he ran into the darkness, highlighting that he is running into peril.
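Keyframed properties such as position, scale or brightness are evaluated between keys by interpolation. The sketch below uses linear interpolation, the simplest case; After Effects also offers Bezier and hold interpolation. The key values for scale and brightness are hypothetical. They were chosen only to mirror Richard shrinking and darkening as he runs away from the camera.

```python
from bisect import bisect_right


def interpolate(keys, frame):
    """Linearly interpolate a keyframed value at `frame`.

    `keys` is a list of (frame, value) pairs. The value is held before
    the first key and after the last, as a layer property is outside
    its keyframes.
    """
    keys = sorted(keys)
    frames = [k[0] for k in keys]
    if frame <= frames[0]:
        return keys[0][1]
    if frame >= frames[-1]:
        return keys[-1][1]
    i = bisect_right(frames, frame) - 1
    (f0, v0), (f1, v1) = keys[i], keys[i + 1]
    t = (frame - f0) / (f1 - f0)
    return v0 + t * (v1 - v0)


# Hypothetical keys: Richard shrinks (runs away from camera) and darkens over 48 frames.
scale_keys = [(0, 100.0), (48, 60.0)]       # layer scale in percent
brightness_keys = [(0, 0.0), (48, -40.0)]   # brightness offset
for f in (0, 24, 48):
    print(f, interpolate(scale_keys, f), interpolate(brightness_keys, f))
```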

Wednesday, 16 March 2011

Compositing the green screen in After Effects

When it came to the post production stage of this project, I put myself forward to do the green screen parts. These happen right at the end of the piece, when our protagonist, Richard, gets cornered in an underground parking area. This would involve a mixture of moving and static camera shots.

The first shot that I worked on for this part was a mid shot of Richard cowering in fear against the graffiti wall. This shot was relatively simple, as I only had to use After Effects to composite it with a rendered shot of the graffiti wall made by Nat.

Here is the original green screen footage:

To match his pose with the action figure that he eventually morphs into, I flipped the video footage horizontally.

Here is the rendered close-up of the graffiti wall.

In After Effects, I composited these together by applying a Keylight filter to the green screen footage to key out the shades of green, which allowed Richard to appear against the graffiti wall. I scaled the graffiti wall until it looked as though it was very close to the camera in relation to Richard. I then drew masks around each of the tracking markers and set them to "Subtract", so that the markers disappeared and only Richard remained.
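Keylight's algorithm is proprietary, but the general idea behind a green screen key can be shown with a textbook colour-difference matte. The further a pixel's green exceeds its red and blue, the more it is treated as screen. The sketch below is a minimal illustration with hypothetical names and a hypothetical `gain` control. It also includes a simple despill step that stops green from fringing onto the foreground.

```python
def green_difference_alpha(r, g, b, gain=4.0):
    """Foreground opacity for one pixel from a simple colour-difference key.

    This is a textbook technique, not Keylight's own algorithm; `gain`
    is a hypothetical control for how aggressively green is removed.
    """
    screen = max(0.0, g - max(r, b)) * gain
    return 1.0 - min(screen, 1.0)


def despill(r, g, b):
    """Limit green to the larger of red and blue to remove green fringing."""
    return r, min(g, max(r, b)), b


def key_over(fg_pixel, bg_pixel, gain=4.0):
    """Key a green screen pixel and composite it over the CG background pixel."""
    r, g, b = fg_pixel
    alpha = green_difference_alpha(r, g, b, gain)
    r, g, b = despill(r, g, b)
    return tuple(alpha * f + (1.0 - alpha) * bg
                 for f, bg in zip((r, g, b), bg_pixel))


wall = (0.2, 0.3, 0.4)                   # CG graffiti wall pixel
screen_pixel = key_over((0.1, 0.8, 0.1), wall)   # becomes the wall
skin_pixel = key_over((0.8, 0.6, 0.5), wall)     # stays as skin
```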

As you can see from this image, Richard does not fit in with the rest of the environment, because he is very bright compared to the dark and eerie surroundings. To solve this, I applied a Curves filter and reduced the brightness, so that Richard was absorbed into the peril of the scene.

Tuesday, 15 March 2011

Blocking out

Each of us was required to produce three 3D blockouts of various shots within the piece. This was to make sure that the lighting in the CG footage matched the lighting in the live action. The lighting and shadowing will be an important factor in how convincingly our swamp monster blends in with the rest of the scene.

This is the manhole shot, from which the swamp monster will emerge. I modelled a hole for the swamp monster to come out of. When we place this manhole in the live action, it will just be a matter of masking out the grey Lambert-shaded polygon area.

The next two shots were trickier to model. I had to create a 3D version of a 2D image that matched it exactly in perspective and also balanced the light accurately. Drawing a 2D image from a live action reference is relatively easy, as you can capture the perspective directly through techniques such as foreshortening and shading. Recreating a 2D image through 3D modelling is harder. You need to create models that have one side much shorter than the other, but that still capture the light properly.
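The foreshortening described above follows from perspective projection. As a rough guide, assume an idealised pinhole camera with focal length $f$; real lenses add some distortion. A point at camera-space position $(X, Y, Z)$ then lands on the image at:

$$
x' = \frac{f\,X}{Z}, \qquad y' = \frac{f\,Y}{Z}
$$

So an edge of length $L$ lying parallel to the image plane at depth $Z$ appears with length $fL/Z$. The same wall edge placed twice as far from the camera appears half as long. This is why a blockout only lines up with the footage when the Maya camera's position and focal length match the real camera's.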

Here are screenshots of my attempts at recreating the two shots.

In this shot, it was particularly hard to model the ground so that it looks flat but still matches the perspective of the live footage.

I showed these to my teammate Matt, who is in charge of lighting, and he said they should be fine to work with.

Friday, 4 March 2011

Transferring UVs from one model to the other

One issue in our production pipeline was that the swamp monster was being textured and UV mapped by other team members while I was rigging the model in a separate file. This could have meant one of us doing the work twice, in order to get both the rigging and the texturing onto a single model for the animation process.

However, after searching YouTube for a way of transferring UVs from one model to another, I found one. Here is a video that I made for the benefit of my fellow team members to demonstrate what I had learnt.

Wednesday, 2 March 2011

The Swamp Monster rigging is finished

I have finally completed the rigging of the swamp monster, so I made this video to demonstrate how the rig works. I made it largely for the benefit of Will, who will be animating the swamp monster, and it is intended as a guide to operating the rig.
